Inductive Bias in Machine Learning: Igniting Success

Preface

In the domain of man-made consciousness, AI remains an incredible asset, changing ventures and our day-to-day routines. Nonetheless, a basic issue that has gathered broad consideration is the presence of predisposition in these calculations. This editorial dives profoundly into the idea of predisposition in AI, its starting points, and its extensive ramifications.

We describe bias and inductive bias in machine learning under these criteria:

- What is bias in machine learning?

- Types of bias in machine learning

- Bias in Machine Learning: Examples

- Addressing Bias in Machine Learning

- Inductive Bias in Machine Learning

- What is inductive bias?

- Types of Inductive Bias

- The Role of Data in Inductive Bias

- How to Distinguish Bias in Machine Learning

- Data Bias in Machine Learning

- Types of Data Bias

♦ What is bias in machine learning?

With regards to AI, inclination alludes to the methodical blunder that keeps a model from precisely addressing the hidden connection between the information highlights and the objective variable. This can prompt expectations that are reliably asked for in a specific course.

Bias in machine learning models can perpetuate or even exacerbate existing societal biases. It is imperative to address and mitigate such biases for fair and equitable applications.

♦ Types of bias in machine learning

There are two main types of bias in machine learning:

Bias due to underfitting:

This occurs when a model is too simple to capture the complexity of the underlying data. It fails to learn the true relationship and ends up making overly simplistic predictions.

Bias due to data imbalance or skewed data:

This happens when the preparation information doesn’t precisely address the population being demonstrated. For instance, if a dataset is intensely slanted towards one class, the model could become one-sided towards foreseeing that class all the more habitually.

♦ Predisposition in AI: Models

In the domain of innovation and man-made brainpower, AI calculations have ascended as essential assets fit for purely deciding expectations in light of information. In any case, they are not reliable. One basic issue that has come to the fore is the presence of a tendency toward this deviousness.

This predisposition can prompt uncalled-for and unfair results, supporting current cultural differences. In this article, we will dig into a few instances of predisposition in AI, revealing insight into the triggers that should be addressed.

Types of Bias

- Algorithmic Bias

Algorithmic bias refers to the methodical and repeatable mistakes in a computer system that create partial outcomes. This bias is often a result of biased training data or the inherent limitations of the algorithm itself.

- Selection Bias

Choice inclination happens while the preparation information used to foster an AI model isn’t illustrative of the more extensive populace. This can prompt slanted results that don’t precisely reflect reality.

Facial Recognition Technology

- Racial Biases

Facial recognition systems have shown significant racial biases, with higher error rates for people of color, particularly for women.

- Gender Bias

These systems also exhibit gender biases, often misidentifying transgender and gender-nonconforming individuals.

Criminal Justice Systems

- Sentencing Disparities

Machine learning algorithms used in criminal justice systems have demonstrated biases in sentencing, with harsher penalties for minority groups.

- Predictive Policing

Predictive policing algorithms have been criticized for reinforcing existing biases in law enforcement practices.

- Gender and Racial Biases

Machine learning algorithms used in hiring processes have been found to favor certain genders or racial groups, perpetuating inequalities in the workplace.

- Socioeconomic Biases

These algorithms can also inadvertently discriminate against candidates from lower socioeconomic backgrounds.

Fairness Measures

- Pre-processing Techniques

Data preprocessing methods, such as re-sampling and re-weighting, can help mitigate bias in training data.

- Post-processing Interventions

After a model is trained, interventions can be applied to adjust predictions and ensure fairness.

- Industry Standards

The tech industry is moving towards establishing ethical guidelines for the development and deployment of machine learning models.

- Government Regulations

Governments are also taking steps to implement regulations that address bias in AI systems.

♦ Addressing Bias in Machine Learning

Addressing bias in machine learning is critical for creating impartial and just technological systems. As we keep on depending on computer-based intelligence in different parts of our lives, we should take a stab at reasonableness and inclusivity.

Strategies like gathering more assorted and agent information, utilizing more perplexing models, and utilizing decency-mindful calculations are far from relieving predisposition in AI frameworks

♦ Inductive Bias in Machine Learning

In the field of AI, the idea of inductive predisposition plays a crucial role in molding the educational experience of calculations. This article delves into the complexities of inductive predisposition, its importance in preparing models, and what it means for the dynamic cycle.

♦ What is inductive bias?

Inductive bias refers to the set of assumptions that a learning algorithm uses to make predictions about unseen data based on its training data. It guides the model towards making generalizations from the observed data, allowing it to perform well on new, unseen examples.

♦ Types of Inductive Bias

Occam’s Razor: Simplicity is Key

One of the most essential predispositions is Occam’s Razor. It proposes that easier speculations are bound to be right than complex ones. With regards to AI, this implies inclining toward easier models over excessively complex ones.

Bias-Variance Tradeoff

The bias-variance tradeoff is a crucial aspect of inductive bias. It balances the tradeoff between the model’s ability to represent the underlying complexity of the data and its tendency to overfit or underfit.

◊ Domain-Specific Bias

- Prior Knowledge Integration

Domain-specific bias involves incorporating prior knowledge about the problem domain into the learning process. This can enhance the model’s performance by providing it with valuable insights.

◊ Learning Algorithm Bias

- Algorithmic Preferences

Different learning algorithms exhibit varying biases. For instance, decision tree algorithms tend to create easily interpretable models, while neural networks are adept at capturing complex relationships.

♦ The Role of Data in Inductive Bias

Data Sampling and Bias

The quality and representativeness of the training data have a direct impact on the inductive bias. Biased or skewed data can lead to models that perform poorly in real-world scenarios.

Transfer learning leverages knowledge gained from one task to improve performance on another. Understanding the inductive bias is crucial for effectively applying transfer learning techniques.

♦ Challenges and Considerations

Adapting to Dynamic Environments

Inductive bias can become a challenge when faced with dynamic or rapidly changing data. Models may need to adapt their biases to stay relevant.

Ethical Implications

Inductive bias forms the cornerstone of machine learning, influencing how models generalize from data. Recognizing and understanding the biases inherent in learning algorithms is essential for developing effective and ethical machine learning systems.

♦ How to Distinguish Bias in Machine Learning

In the rapidly embryonic landscape of artificial intelligence and machine learning, detecting and mitigating bias has become paramount. Bias in machine learning models can lead to unfair and prejudiced outcomes, preserving existing social inequalities. This article will guide you through the process of identifying and addressing bias in machine learning algorithms.

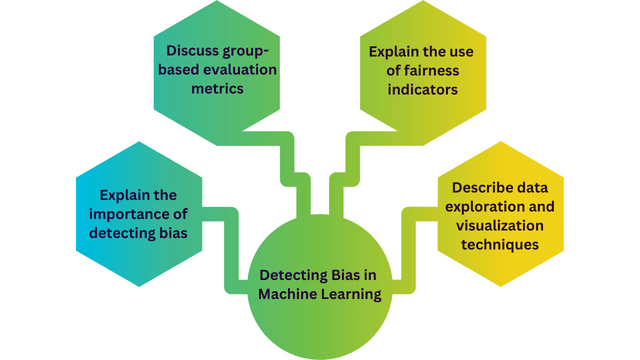

♦ Detecting Bias in Machine Learning

- Data Distribution Analysis

Begin by visualizing the distribution of your training data. Look for disparities in the representation of different groups. Tools like histograms and scatter plots can be invaluable for this task.

- Class Imbalance Assessment

Check for class imbalances in the dataset. If one group is significantly underrepresented, the model may struggle to make accurate predictions for that group.

Model Performance Evaluation

Utilize group-specific evaluation metrics to assess how well the model performs for different subgroups. This can highlight any discrepancies in prediction accuracy.

- Fairness Indicators

Implement fairness indicators to quantify and visualize bias in the model’s predictions. These metrics provide insights into the disparate impact on various groups.

♦ Mitigating Bias

Data Augmentation and Balancing

- Over sampling and Under sampling

Address data imbalances by oversampling the minority class and under sampling the mainstream class. This helps create a more representative training set.

Feature Engineering

- Sensitive Feature Removal

Consider removing sensitive features that could potentially introduce bias. Alternatively, use techniques like adversarial debiasing to mitigate their influence.

Detecting and mitigating bias in machine learning models is a critical step towards building fair and equitable AI systems. By employing a combination of data exploration, model evaluation, and targeted interventions, we can work towards creating algorithms that benefit all segments of society.

♦ Data Bias in Machine Learning

In the rapidly progressing domain of man-made reasoning and AI, information shapes the bedrock whereupon calculations work. In any case, a basic worry that has arisen lately is the presence of information predisposition. This phenomenon can significantly impact the outcomes and applications of machine learning models. This article delves into the intricacies of data bias, its implications, and potential mitigation strategies.

♦ Unpacking Data Bias

Data bias refers to the systematic error in a machine learning model’s predictions due to skewed training data. It arises when the training data is not representative of the real-world population it aims to model. This deviation can result in unfair or inaccurate predictions, particularly for underrepresented groups.

◊ Types of Data Bias

Selection Bias

Selection bias occurs when the training data is not a random sample of the population. For instance, in a healthcare dataset, if only data from a specific demographic is included, it may lead to biased predictions for other groups.

Confirmation bias arises when the data used for training reinforces existing stereotypes or beliefs. If the data predominantly portrays a certain group in a particular light, the model may perpetuate these biases.

Temporal Bias

Temporal bias stems from changes in the target variable over time. If the training data spans different periods, the model may struggle to adapt to new trends, leading to biased predictions.

◊ Implications of Data Bias

Data bias can exacerbate existing societal inequalities. For example, biased criminal justice models may unfairly penalize certain demographics, perpetuating an unjust system.

Diminished Trust

When users perceive bias in machine learning outputs, trust in these systems is eroded. This lack of trust can hinder the widespread adoption of AI-powered technologies.

Ethical Considerations

Data bias raises profound ethical questions. Developers and organizations must grapple with how to rectify biased models and ensure fairness and justice in their applications.

◊ Mitigating Data Bias

Diverse and Representative Data Collection

To combat data bias, it is imperative to collect a diverse and representative dataset. This entails ensuring that all relevant groups are adequately represented.

Preprocessing techniques such as data augmentation and re-sampling can help mitigate bias. By carefully curating and preparing the training data, developers can reduce the impact of skewed information.

Continuous Monitoring and Feedback Loops

Models should be subjected to continuous evaluation for bias, and feedback loops should be established to refine the algorithms over time.

Data bias in machine learning is a critical issue that demands attention. Understanding its nuances, implications, and potential mitigation strategies is paramount for the responsible development and deployment of AI technologies.

FAQs

Q1. How can bias in machine learning impact real-world applications?

Ans: Bias in machine learning can have profound effects on real-world applications. For example, in hiring processes, biased algorithms may favor certain demographic groups, perpetuating existing inequalities. In healthcare, biased algorithms could lead to incorrect diagnoses or treatment recommendations for certain populations.

Q2: How might one decide the proper degree of inductive predisposition for a particular undertaking?

Ans: Finding the right equilibrium of inductive predisposition requires trial and error and adjusting because of the idea of the information and the intricacy of the errand.

Q3. Can bias in machine learning be eliminated?

Ans: No, but it can be significantly reduced through careful data handling and algorithmic choices.

Q4. What are some common sources of bias in machine learning?

Ans: Biased training data, skewed representation, and algorithmic limitations are common sources of bias in machine learning.

Q5. Why is detecting bias in machine learning important?

Ans: Detecting bias ensures that machine learning models make predictions without favoring any particular group, promoting fairness and equity.

Q6. How does data bias affect real-world applications of machine learning?

Ans: Data bias can lead to inaccurate and unfair predictions, reinforcing existing inequalities and diminishing trust in AI systems.

Get access all prompts: https://bitly.com/xu4n