Feature Selection Techniques in Machine Learning

Preface

In the dynamic field of machine learning, selecting the proper features is analogous to selecting the right tools for a task. It is a critical phase that has a direct impact on the effectiveness of your model.

This edition delves deep into the area of feature selection strategies, providing you with a thorough guide to making informed decisions while creating robust machine learning models.

♦ The Significance of Feature Selection

When dealing with datasets, using all available data and columns is often unneeded and can lead to issues like overfitting and underfitting.

These difficulties can be mitigated by carefully selecting important features that have a real impact on the model’s performance. Essentially, it is a matter of locating the sweet spot—the subset of data that enhances predicted accuracy.

♦ Supervised vs. Unsupervised Learning

Before getting into specific strategies, it’s important to grasp the two main types of feature selection: supervised and unsupervised learning.

Labeled data is used to train algorithms and anticipate outputs for new, unseen data in supervised learning. Unsupervised machine learning, on the other hand, works with unlabeled data to uncover hidden patterns.

Supervised Feature Selection Techniques

The target variable, or the outcome you wish to predict, guides the selection of relevant features in supervised feature selection. We’ll go over three techniques:

- Filter-Based Approach

- Wrapper-Based Approach

- Embedded Approach

♦ Delving into Filter-Based Feature Selection Techniques

Filter-based techniques assess the worth of each feature without taking into account the effectiveness of a specific machine learning algorithm. Among these techniques are:

This method is similar to sorting toys by color or shape to find the most fascinating ones. It aids in the identification of the most informative features in your dataset. It measures the level of information a characteristic contributes to the prediction by utilizing mathematical computations.

Chi-Squared Test: Unveiling Relationships

Similar to correlating unrelated events, this statistical test helps discern if features are related or occur by chance. It measures the independence of features from the target variable and is particularly useful for categorical data.

Fisher’s Score: Recognizing Significance

Fisher’s Score, which assigns points to characteristics depending on their relevance, aids in identifying the most important parts of a dataset. It identifies the features with the greatest impact on the target variable by measuring their statistical significance.

This technique determines how many features have missing data, which is crucial for model accuracy. It’s like counting puzzle pieces; if too many are missing, completing the puzzle becomes challenging, and similarly, features with extensive missing data might not contribute effectively to the model.

♦ Unraveling Wrapper-Based Feature Selection Techniques

Wrapper-based approaches identify the most essential features in a dataset by employing specialized machine learning models. Here are the four major types:

This technique starts with one feature and incrementally adds more to achieve the most accurate results. It’s akin to assembling a team of players; each addition should enhance overall performance.

Backward Selection: Streamlining Significance

The reverse of forward selection begins with all features and selectively removes those that don’t contribute to model accuracy. It’s about trimming excess to focus on the essentials.

Exhaustive Feature Selection: Covering all Bases

This method assesses all potential feature combinations to determine the subset that performs best for a certain machine learning model. It’s a comprehensive approach that ensures no possible contribution is neglected.

This method begins with a subset of features and subsequently adds or removes features according to their relevance. It’s about finding the optimal team configuration for peak performance.

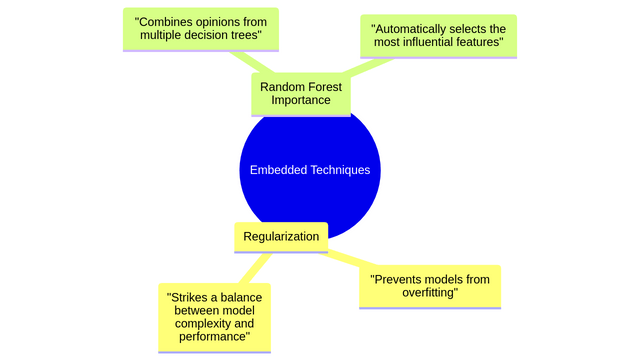

♦ Embedded Feature Selection Techniques: Integrating Insight

Embedded techniques embed feature selection into the learning algorithm, selecting the most essential elements automatically. There are two distinct strategies:

Regularization: Striking a Balance

This method prevents models from getting too complex and overfitting the data. It’s analogous to utilizing guardrails to retain control and keep the model from becoming overly complex.

This technique discovers the most influential aspects by combining opinions from several decision trees. It’s similar to collecting counsel from a varied set of specialists and combining their thoughts for a well-informed decision.

♦ Navigating Unsupervised Feature Selection Techniques

Unsupervised techniques do not rely on labeled data. Instead, they use algorithms to find patterns and similarities in the data. These techniques include:

Principal Component Analysis (PCA): Streamlining Complexity

PCA simplifies data by identifying the most important components, like finding key elements in a complex picture. It’s about distilling complexity down to its core elements.

ICA separates mixed data into its individual components, akin to picking out voices in a crowd. It’s about isolating distinct signals from a sea of noise.

Non-negative Matrix Factorization (NMF): Deconstructing Magnitudes

NMF breaks down large numbers into smaller positive numbers, revealing the essential parts of a whole. It’s akin to disassembling a complex puzzle into its fundamental pieces.

T-distributed Stochastic Neighbor Embedding (t-SNE): Visualizing Similarities

t-SNE helps visualize and explore similarities in data, creating a map of related items. It’s about putting similar elements in close proximity on the map, providing a clear visual representation of data relationships.

An autoencoder is a sort of artificial neural network that learns how to replicate objects. It’s like educating the machine to replicate the painting so it may learn to draw the animal on its own. Autoencoders are important for teaching robots to understand and recreate tasks such as drawing graphics or images.

Conclusion: Empowering Model Accuracy

To summarize, feature selection is an important part of developing accurate machine learning models. You can avoid the complications of overfitting and underfitting by using these approaches. Choose the technique that best meets the needs of your project, and watch your models thrive. Feature selection is, undoubtedly, the foundation of strong and effective machine learning.

FAQs

◊ Why is feature selection important in machine learning?

Feature selection is critical since it aids in identifying the most significant data for model correctness, hence enhancing the performance of machine learning models.

◊ What makes supervised feature selection different from unsupervised feature selection?

Supervised feature selection uses labeled data to guide the selection of relevant features, while unsupervised feature selection does not rely on labeled data and identifies patterns independently.

◊ How does filter-based feature selection work?

Finding the most informative features is made easier by filter-based techniques, which assess each feature’s worth independently of how well a particular machine learning algorithm performs.

◊ What are wrapper-based feature selection techniques?

To identify the most crucial features in a dataset and maximize accuracy, wrapper-based approaches use certain machine learning models.

◊ How do embedded feature selection techniques differ from other methods?

Embedded approaches include feature selection into the learning process itself, recognizing the most essential features during model training automatically.

Get access all prompts: https://bitly.com/xyz